White House Says Amazon, Google, Meta, Microsoft Agree to AI Safeguards

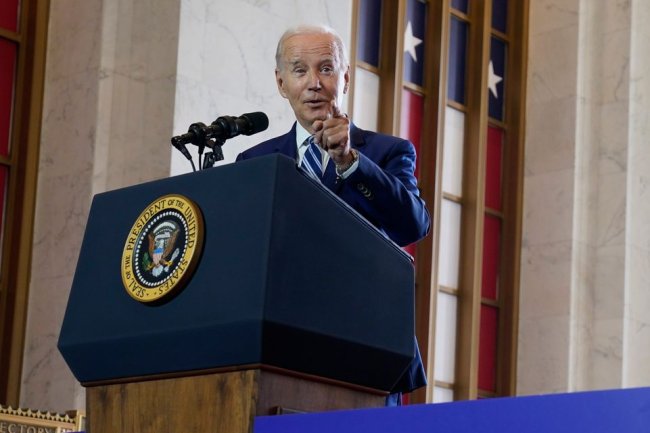

Tech companies adopt voluntary guidelines, such as watermarking artificial content

By Sabrina Siddiqui and Deepa Seetharaman

Updated July 21, 2023 2:03 pm ET

President Biden announced a deal with seven tech companies to put more safeguards in AI technologies. Photo: Andrew Caballero-Reynolds/AFP/Getty Images

WASHINGTON—The Biden administration says it has reached a deal with big tech companies to put more guardrails around artificial intelligence, including the development of a watermarking system to help users identify AI-generated content, as part of its efforts to rein in misinformation and other risks of the rapidly growing technology.

The White House said seven major AI companies (Amazon.com, Anthropic, Google, Inflection, Meta Platforms, Microsoft and OpenAI) are making voluntary commitments that also include testing their AI systems’ security and capabilities before their public release, investing in research on the technology’s risks to society, and facilitating external audits of vulnerabilities in their systems.

On Friday, most of the companies issued statements saying they would work with the White House, while also emphasizing that the guardrails were voluntary. Leaders from the companies will meet with President Biden at the White House on Friday.

“By moving quickly, the White House’s commitments create a foundation to help ensure the promise of AI stays ahead of its risks,” said Brad Smith, president of Microsoft, which earlier this year made a multibillion-dollar investment in OpenAI. In a separate statement, OpenAI said the voluntary commitments outlined Friday would “reinforce the safety, security and trustworthiness of AI technology and our services.”

There aren’t enforcement mechanisms for the commitments outlined on Friday, and they largely reflect the safety practices already implemented or promised by the AI companies involved.

The announcement comes as Biden and his administration have placed an increased emphasis on both the benefits and pitfalls of AI, with a broader goal of developing safeguards around the technology through both regulation and congressional action. Biden convened a meeting with AI experts and researchers in San Francisco last month and hosted the CEOs of Google, Microsoft, Anthropic and OpenAI at the White House in May.

In remarks at the White House on Friday, Biden said AI poses risks and opportunities. “We’ll see more technology change in the next 10 years, or even in the next few years than we’ve seen in the last 50. That has been an astounding revelation to me, quite frankly,” he said, adding, “This is a serious responsibility. We have to get it right.”

Amazon said it was committed to collaborating with the White House and others on AI. “Amazon supports these voluntary commitments to foster the safe, responsible, and effective development of AI technology,” it said in a statement.

Seven leaders from tech companies met with President Biden at the White House on Friday. Photo: Anna Moneymaker/Getty Images

Before OpenAI launched its GPT-4 model in March, the company spent roughly six months working with external experts who tried to provoke it to produce harmful or racist content. Most companies developing large language models also rely heavily on humans who teach these models to be engaging and helpful and to avoid generating toxic responses, a process called reinforcement learning from human feedback.

OpenAI also introduced a bug bounty program in April to reward security researchers who spotted gaps in the company’s system. Some of the companies, including Anthropic, talk about their safety testing methods in academic papers and on their websites and have ways for users to flag problematic responses.

Many of the companies are also exploring ways to tag images made by AI, a step that could potentially avoid the uproar caused in late May when a fake photo of an explosion at the Pentagon went viral online and caused a momentary dip in the stock market. OpenAI’s image-generating system Dall-E produces images with a rainbow watermark at the bottom. Google said this spring that it would embed data inside images created by its AI models that would indicate they are synthetic.


But not every image-generation model follows this rule, and it remains simple for people to remove indications that an image is AI-generated. OpenAI’s content policy allows users to remove the watermark, and there are instructions on sites such as Reddit that explain to users how to eliminate those details.

The guidelines outlined Friday don’t require companies to disclose information about their training data, which experts say is crucial to combat bias, prevent copyright abuse and understand models’ capabilities.

A White House official said that under the agreement, the companies will develop and implement a watermarking system for both visual and audio content. The watermark would be embedded into the platform so that any content created by a user would either identify which AI system created it or indicate that it was AI-generated, the official said.

White House officials have said their hope is to establish rules around artificial-intelligence tools sooner rather than later, citing lessons learned from Washington’s inability to crack down on the proliferation of misinformation and harmful content on social media. The new commitments, they noted, aren’t a substitute for federal action or legislation, and the White House is actively developing an executive order “to govern the use of AI.”

White House chief of staff Jeff Zients said the commitments put in motion external checks and balances that would ensure technology companies aren’t simply holding themselves accountable.

“It’s not just the companies and them doing a good job and making sure that their products are safe and that they’re pressure tested,” he said. “But also, they can’t grade their own homework here.”


—Andrew Restuccia contributed to this article.

